Latest Digital Measurement Clinic

As promised, today is my 2nd session: a short Webinar offering thought-starters on the thorniest trends/issues impacting your digital marketing/advertising strategies this year…1 PM Eastern and replayed @ 3 PM.

http://app.webinarjam.net/register/20164/1b40c3b072

Enjoy!

Encima Digital Media Measurement 101 Web Clinic

This Thursday morning @ noon Central (2/23/16), doing a short Webinar on the basic frameworks for Digital Media Measurement…first of two!

What’s the difference between viewthrough and viewability?

In case you haven’t noticed, digital marketing performance is more important than ever. With advertisers’ increasing need for accountability and measurement tools evolving to meet that need, there is major and growing interest in associated ad analytics or adverlytics. More specifically, display viewability measurement and attribution are hot topics. Yet, before the explosion in ad viewability technologies and an enthusiastic trade group response to standardize (IAB, MRC), viewthrough remains complicated and mysterious.

3rd party ad serving (3PAS). That means an advertiser’s digital media agency centrally manages the serving of their display ad campaign across multiple Web sites and/or ad networks. Typical tools used for this purpose include Sizmek, Pointroll, Google DoubleClick and Atlas. The beauty of this approach is having one report that consolidates performance metrics for one or multiple campaigns across multiple media vendors. This advertiser-centric approach even allows for performance analysis by placement, ad size or creative treatment across media vendors. Prior to this, agencies had to collect the reports from each media vendor, and doing cross-dimension analysis required advanced Excel manipulation. The consolidated report usually included impressions, clicks, media cost, a calculated click rate and effective CPM (cost per thousand impressions). Average frequency of ad delivery could potentially be reported as well.
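To make the two calculated metrics concrete, here is a minimal Python sketch of how a consolidated report could derive click rate and effective CPM from raw impressions, clicks and media cost. The vendor names, numbers and the `PlacementRow` structure are hypothetical illustrations, not the output format of any particular ad server.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class PlacementRow:
    """One line of a (hypothetical) per-vendor delivery report."""
    vendor: str
    impressions: int
    clicks: int
    media_cost: float  # in dollars


def click_rate(row: PlacementRow) -> float:
    """Clicks divided by impressions (0.0 when nothing was served)."""
    return row.clicks / row.impressions if row.impressions else 0.0


def effective_cpm(row: PlacementRow) -> float:
    """Media cost per thousand impressions (0.0 when nothing was served)."""
    return row.media_cost / row.impressions * 1000 if row.impressions else 0.0


def consolidate(rows: Iterable[PlacementRow]) -> PlacementRow:
    """Roll several vendor rows up into one campaign-level row."""
    rows = list(rows)
    return PlacementRow(
        vendor="ALL",
        impressions=sum(r.impressions for r in rows),
        clicks=sum(r.clicks for r in rows),
        media_cost=sum(r.media_cost for r in rows),
    )


# Made-up sample data for two media vendors.
rows = [
    PlacementRow("Vendor A", impressions=200_000, clicks=400, media_cost=1_000.0),
    PlacementRow("Vendor B", impressions=300_000, clicks=300, media_cost=1_200.0),
]

for row in rows + [consolidate(rows)]:
    print(f"{row.vendor}: click rate {click_rate(row):.2%}, eCPM ${effective_cpm(row):.2f}")
```

Note that the campaign-level click rate and eCPM are computed from the summed totals rather than by averaging the per-vendor rates, which keeps large and small placements properly weighted.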

- Viewthrough – It’s all about response measurement. Advertisers ultimately should be very keen on measuring viewthrough from display media if they are interested in Web site engagement and even more so if an online conversion is possible (offline measurement is also possible).

- Viewability – Think front-end of the advertising process, i.e. the inputs. The focus is on doing what branding has always sought to do through media campaigns: create an impression, influence behavior or change preferences.

What’s the difference between viewthrough and viewability?

New Post for busy digital marketers!


Full article cross-posted on Encima Digital Blog and Viewthrough Measurement Consortium site.

RIP: Beautiful Mind

Nash Equilibrium

Here is a short deck explaining the application in Game Theory.

In 1994 John Nash shared the Nobel Memorial Prize in Economics. He was the subject of a 1998 book and 2001 film, A Beautiful Mind.

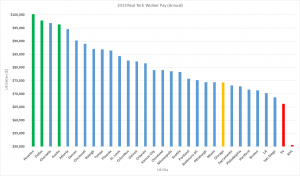

Real Tech Worker Pay Revisited (2013 Data)

The problem with this narrative is that it is wrong-minded and very misleading. So far, no retraction from the author or publisher. The fact is that when compared to NYC and Silicon Valley pay and when just looking at the DICE raw numbers, it appears that workers are better off in those markets because the pay is higher.

Although this is true in Nominal Dollars, reality is different, as each area has different costs of living for the same goods and services, e.g. housing, groceries, utilities and so on. Often used for analysis of inflation over time, this kind of adjustment is referred to as Real versus Nominal Value.
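The adjustment can be sketched in a few lines of Python. This assumes the cost of living index is scaled so that 100 is the baseline, which is an assumption on my part, although it does reproduce the Real Dollars figures in the table further down. The function name is just illustrative.

```python
def real_dollars(nominal_salary: float, cost_of_living_index: float) -> float:
    """Convert a local (nominal) salary into real dollars.

    Assumes the index uses 100 as its baseline, so a city with an index
    of 92.2 is cheaper than the baseline and one at 185.8 far more expensive.
    """
    return nominal_salary / cost_of_living_index * 100


# Local average salary and index for three markets, taken from the table below.
markets = {
    "Houston": (92_475, 92.2),
    "SV": (108_603, 164.0),
    "NYC": (93_915, 185.8),
}

for city, (salary, index) in markets.items():
    print(f"{city}: ${real_dollars(salary, index):,.0f}")
# Houston: $100,298
# SV: $66,221
# NYC: $50,546
```

Silicon Valley has the highest nominal pay of the three, yet Houston comes out well ahead once the local cost of living is taken into account.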


With that in mind, the data was updated with 2013 salaries, expanding the number of cities reviewed from 10 to 31 US cities. Of course, it tells a very different story from what Crain’s Chicago Business had suggested last year.

Some interesting highlights of the data:

- Texas cities Houston, Dallas and Austin are some of the best deals for workers with Charlotte, NC and Atlanta also looking good (left-most on graph)

- NYC and Silicon Valley are absolutely the worst deal for workers (right-most on graph)

- Chicago (my current home base) looks better than the usual suspects, but it is well below mean and median, i.e. in comparison to many other US cities, which may have something to do with Illinois, Cook County and Chicago financial shenanigans.

- Mean and Median were close at $80,345 and $79,050 respectively

- Quality of living arguments can be inserted at this point (cold winters) as well as the impact of high taxes on the local economy

| Rank | Market Area | Local Avg | Index | Real Dollars | Comments |
| --- | --- | --- | --- | --- | --- |
| 1 | Houston | $92,475 | 92.2 | $100,298 | |
| 2 | Dallas | $89,952 | 91.9 | $97,880 | |
| 3 | Charlotte | $90,352 | 93.2 | $96,944 | |
| 4 | Austin | $91,994 | 95.5 | $96,329 | |
| 5 | Atlanta | $90,474 | 95.6 | $94,638 | |
| 6 | Denver | $93,195 | 103.2 | $90,305 | |
| 7 | Cincinnati | $83,537 | 93.8 | $89,059 | |
| 8 | Raleigh | $85,559 | 98.2 | $87,127 | |
| 9 | Tampa | $80,273 | 92.4 | $86,876 | |
| 10 | Phoenix | $87,114 | 100.7 | $86,508 | |
| 11 | St. Louis | $76,220 | 90.4 | $84,314 | |
| 12 | Columbus | $76,035 | 92.0 | $82,647 | |
| 13 | Detroit | $81,832 | 99.4 | $82,326 | |
| 14 | Orlando | $79,805 | 97.8 | $81,600 | |
| 15 | Kansas City | $77,329 | 97.8 | $79,069 | |
| 16 | Cleveland | $79,840 | 101.0 | $79,050 | |
| 17 | Minneapolis | $87,227 | 111.0 | $78,583 | |
| 18 | Seattle | $95,048 | 121.4 | $78,293 | |
| 19 | Portland | $84,295 | 111.3 | $75,737 | |
| 20 | Baltimore + DC | $97,588 | 129.8 | $75,212 | avg |
| 21 | Pittsburgh | $68,100 | 91.5 | $74,426 | |
| 22 | Miami | $78,872 | 106.0 | $74,408 | |
| 23 | Chicago | $86,574 | 116.5 | $74,312 | |
| 24 | Sacramento | $85,100 | 116.2 | $73,236 | |
| 25 | Philadelphia | $92,138 | 126.5 | $72,836 | |
| 26 | Hartford | $87,265 | 121.8 | $71,646 | |
| 27 | Boston | $94,531 | 132.5 | $71,344 | |
| 28 | LA | $95,815 | 136.4 | $70,246 | |
| 29 | San Diego | $90,849 | 132.3 | $68,669 | |
| 30 | SV | $108,603 | 164.0 | $66,221 | |
| 31 | NYC | $93,915 | 185.8 | $50,546 | 3 borough avg |

| Statistic | Local Avg | Index | Real Dollars |
| --- | --- | --- | --- |
| Mean | $87,158 | 111 | $80,345 |
| Median | $87,227 | 101 | $79,050 |
| Mode | n/a | 97.8 | n/a |
| Max | $108,603 | 186 | $100,298 |
| Min | $68,100 | 90 | $50,546 |
| Std Dev | $8,052 | 22 | $10,837 |

SOURCES: DICE US Salary Survey 2013 and US City Cost of Living (Infoplease).

The Encima Group Donates Tag Management Referrals, Maintains Neutrality

Newark, DE – August 18, 2014 – Analytics consultancy The Encima Group is pleased to announce the donation of several thousand dollars in referral fees earned through the recent recommendation and subsequent implementation of Signal’s technology platform. Signal’s Tag Management system (formerly BrightTag) was chosen by two of Encima’s major pharmaceutical clients as the best-in-class tag management solution. For one Encima client, the prior tag management system took too much time to use and was expensive. It was replaced with Signal, and the client is already seeing ongoing tag maintenance take less than 10% of the time that it did before. For another client, Signal was deployed together with an enterprise site analytics solution across several high-profile Web sites, making ongoing tag maintenance a snap.


David Clunes, CEO and Founder of The Encima Group, explains, “With technology vendors often jockeying on new capabilities, we prefer to let them do what they do best without getting caught up. We purposefully do not recommend the technology platforms that make us the most money; instead we recommend what is best for our clients’ long-term analytics success. Donations like this help us continue to maintain our neutrality – all while doing some good for the industry.”

The Encima Group, known best for its independent analytics and digital operations services, often finds itself recommending platforms for clients. Sometimes viewed as another value-added reseller, The Encima Group sees itself as an extension of its clients’ organizations and vigorously maintains its “Switzerland” status. That sensibility extends from the firm’s analytics practice, which uniquely eschews agency media buying and creative services to focus on providing clients with both objective performance reporting and unbiased campaign optimization recommendations.

Clunes continues, “When it comes to analytics, more objectivity is always a good thing. We feel that this is a great way of paying it forward and that hopefully other firms get the idea.” By sharing the referral fees that it earned, Encima is simultaneously investing in two worthy causes known to analytics professionals worldwide: The Digital Analytics Association, a global organization for digital analytics professionals and Piwik, the globally popular open source Web analytics platform.

“The Digital Analytics Association is thrilled by the Encima Group’s donation,” said DAA Board Chair, Jim Sterne. “The funds will be added to our general fund to benefit all members of the DAA. We hope that others in the space will follow Encima’s leadership in this area.” For Piwik, the funds will be used to facilitate continued development of this open-source platform. Available as an alternative to sharing with 3rd parties, Piwik allows digital marketers to control their Web site behavioral data. Maciej Zawadziński, of the Piwik Core Development Team says, “This is great and will help us to further develop an alternative free Web analytics platform.”

About The Encima Group

The Encima Group is an independent analytics consultancy that was recently recognized for its successful growth in the Inc. 5000 (ranking in top 25%). The Encima Group’s mission really is about actionable analytics and flawless execution. Offering an integrated suite of services around multi-channel measurement, tag management, dashboards, technology strategy consulting and marketing operational support, The Encima Group pioneered the notion of Data Stewardship. The Encima Group is based in Newark, DE with offices in Princeton, NJ and Chicago, IL. Its client roster includes leading pharmaceutical companies like Bristol-Myers Squibb, Shire Pharmaceuticals, Otsuka, AstraZeneca and Novo Nordisk.

For more information about The Encima Group, visit www.encimagroup.com. For more information about Signal, visit www.signal.co; for Piwik, visit www.piwik.org; and for the Digital Analytics Association, visit www.digitalanalyticsassociation.com.

Media Contact(s)

Jason Mo, Director of Business Development (jmo AT encimagroup DOT com); phone (919) 308-5309; Domenico Tassone, VP Digital Capabilities (dtassone AT encimagroup DOT com); Phone.

Interview with Chango’s CMO

Enjoy!

Eprivacy Issues & Standards – Where Do We Stand?

Recent deck from Anna Long (of the DAA Standards Committee).